Serving Models with the TensorFlow Serving API

We have recently been building a model serving framework for online inference. Our models are trained with TensorFlow, and we need to serve them with low latency and high throughput.

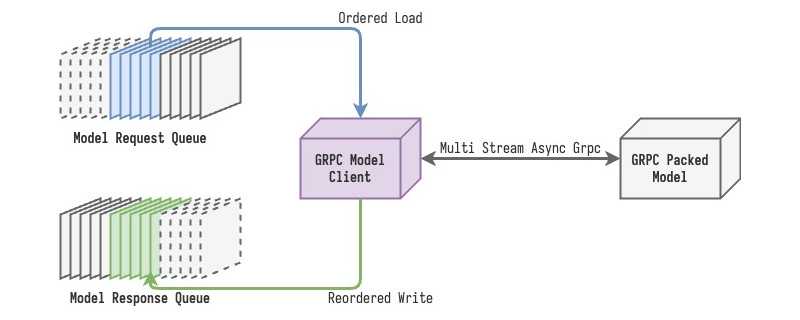

The model's input arrives on a Kafka topic, and its output is published to another Kafka topic. Because the serving framework has to consume and produce Kafka messages, we wrote it in Java. TensorFlow Serving exposes its API over gRPC, with the request, response, and service definitions written as protobuf files. By compiling those protobuf files into Java code, our Java service can talk to TensorFlow Serving, which hosts the models we built in Python. We started a project, tensorflow-serving-api, to act as this client library, which can then be integrated with Kafka clients to serve models.
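The data flow described above amounts to a consume-predict-produce loop. The following is a minimal, stdlib-only sketch of that loop under stated assumptions: `InputSource`, `ModelClient`, `OutputSink`, and `ServingLoop` are hypothetical names, not part of tensorflow-serving-api. In a real deployment they would wrap a Kafka `KafkaConsumer`, the gRPC stub generated from the TensorFlow Serving protobuf files, and a Kafka `KafkaProducer`. Payloads are simplified to strings, and offset commits and error handling are left out.

```java
import java.util.List;

// Hypothetical stand-in for a Kafka consumer on the input topic:
// returns the next batch of message payloads (empty if none are available).
interface InputSource {
    List<String> poll();
}

// Hypothetical stand-in for the gRPC client generated from the
// TensorFlow Serving protobuf files: turns one input payload into one prediction.
interface ModelClient {
    String predict(String payload);
}

// Hypothetical stand-in for a Kafka producer on the output topic.
interface OutputSink {
    void send(String prediction);
}

// Consume-predict-produce loop: every message read from the input source
// is sent to the model, and each prediction is forwarded to the sink in order.
final class ServingLoop {
    private final InputSource source;
    private final ModelClient model;
    private final OutputSink sink;

    ServingLoop(InputSource source, ModelClient model, OutputSink sink) {
        this.source = source;
        this.model = model;
        this.sink = sink;
    }

    // Processes a single polled batch and returns how many messages it handled.
    int pollOnce() {
        List<String> batch = source.poll();
        for (String payload : batch) {
            sink.send(model.predict(payload));
        }
        return batch.size();
    }
}
```

Keeping Kafka and gRPC behind small interfaces lets the loop be exercised with in-memory fakes: a lambda that returns a fixed batch as the source, a lambda as the model, and `List::add` as the sink run the whole path without a broker or a model server.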

Thanks to gRPC, the framework can serve models with high performance, and it has proven to be the right choice for us.