My Understanding of Knowledge Distillation

Introduction

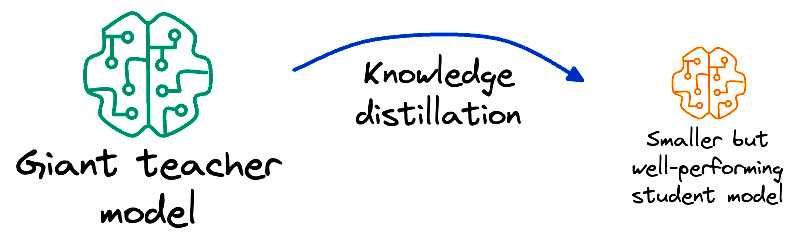

Knowledge distillation is a widely used model compression technique in machine learning. It trains a small model to reproduce the behavior of a large one.

The large model, called the teacher model, is usually a deep neural network with many parameters. The smaller model, called the student model, is typically a shallower or narrower network with far fewer parameters. The goal of knowledge distillation is to transfer what the teacher has learned to the student, so that the student reaches performance close to the teacher's while being cheaper to run.

A neural network is normally trained by minimizing a loss between its predictions and the ground-truth labels. Knowledge distillation adds a second term, the distillation loss, which penalizes the difference between the student's output and the teacher's output. With both terms, the student learns not only from the ground-truth labels but also from the soft labels produced by the teacher, that is, the teacher's full probability distribution over the classes.
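To make the two-term objective concrete, here is a minimal pure-Python sketch for a single training example. It is not taken from any particular library: the function names, the weighting factor `alpha`, the default temperature, and the `temperature ** 2` scaling of the soft term (a common choice in the literature) are all assumptions made for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax of logits / temperature, shifted by the max for numerical stability."""
    scaled = [x / temperature for x in logits]
    shift = max(scaled)
    exps = [math.exp(s - shift) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(target, predicted):
    """Cross-entropy H(target, predicted) = -sum_i target_i * log(predicted_i)."""
    return -sum(t * math.log(p) for t, p in zip(target, predicted) if t > 0)


def distillation_objective(student_logits, teacher_logits, label,
                           temperature=4.0, alpha=0.5):
    """Weighted sum of a hard-label loss and a soft-label (distillation) loss.

    alpha and temperature are hypothetical hyperparameters chosen for this sketch.
    """
    # Hard loss: student prediction (temperature 1) against the ground-truth label.
    one_hot = [1.0 if i == label else 0.0 for i in range(len(student_logits))]
    hard_loss = cross_entropy(one_hot, softmax(student_logits))

    # Soft loss: student against the teacher's softened distribution.
    soft_targets = softmax(teacher_logits, temperature)
    soft_predictions = softmax(student_logits, temperature)
    soft_loss = cross_entropy(soft_targets, soft_predictions)

    # Assumption: scale the soft term by T^2 so both terms have comparable gradients.
    return alpha * hard_loss + (1.0 - alpha) * temperature ** 2 * soft_loss


teacher = [5.0, 2.0, 0.5]   # made-up teacher logits
student = [1.0, 1.5, 0.2]   # made-up student logits
print(distillation_objective(student, teacher, label=0))
```

With `alpha=0.0` only the soft term remains, and it is smallest when the student's logits reproduce the teacher's distribution, which is the sense in which the student "learns from" the teacher.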

Distillation Loss Function

Notation

- $L$: loss

- $T$: temperature

- $z$: logits of the student model

- $v$: logits of the teacher model

- $q$: output vector of the student model

- $p$: output vector of the teacher model
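The link between the logits and the output vectors listed above can be sketched in a few lines of Python. This assumes, as is standard in knowledge distillation, that each output vector is a softmax of the corresponding logits divided by the temperature. The function name `softened_output` and the example logits are made up for illustration.

```python
import math


def softened_output(logits, temperature):
    """Turn logits into a probability vector using a softmax with temperature."""
    scaled = [x / temperature for x in logits]
    shift = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


teacher_logits = [6.0, 2.0, 1.0]  # made-up values

# Temperature 1 gives the ordinary softmax, dominated by the largest logit.
sharp = softened_output(teacher_logits, temperature=1.0)

# A higher temperature flattens the distribution, exposing how the teacher
# ranks the non-maximal classes.
soft = softened_output(teacher_logits, temperature=5.0)

print(sharp)
print(soft)
```

Raising the temperature keeps the ranking of the classes but spreads probability mass onto the less likely ones, which is what makes the teacher's soft labels more informative than a one-hot label.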

Distillation Loss Function

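When the student logits $z$ and the teacher logits $v$ are both zero-mean, that is, $\sum_j z_j = 0$ and $\sum_j v_j = 0$, and the temperature is large compared with the magnitude of the logits, the distillation loss function can be approximated as: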